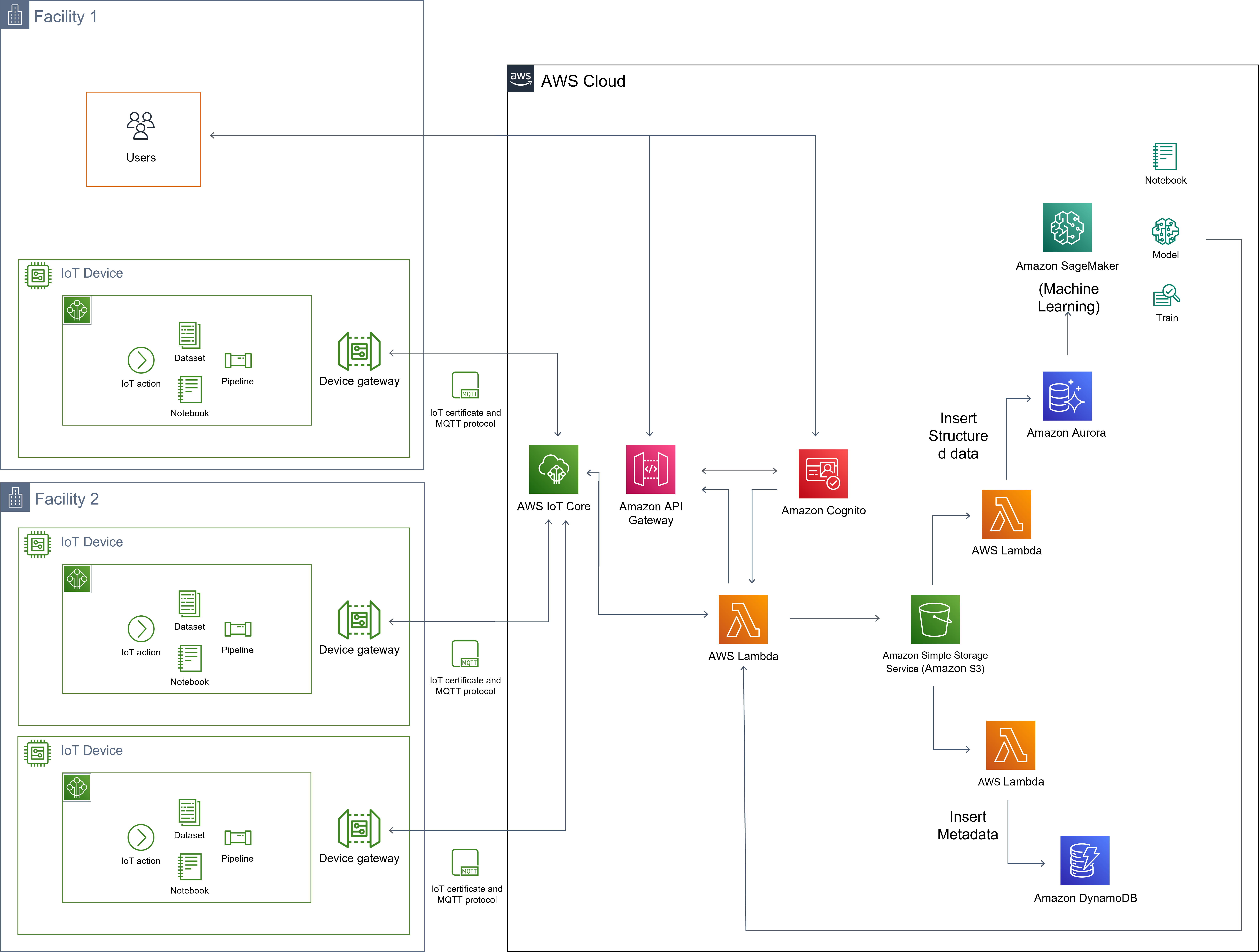

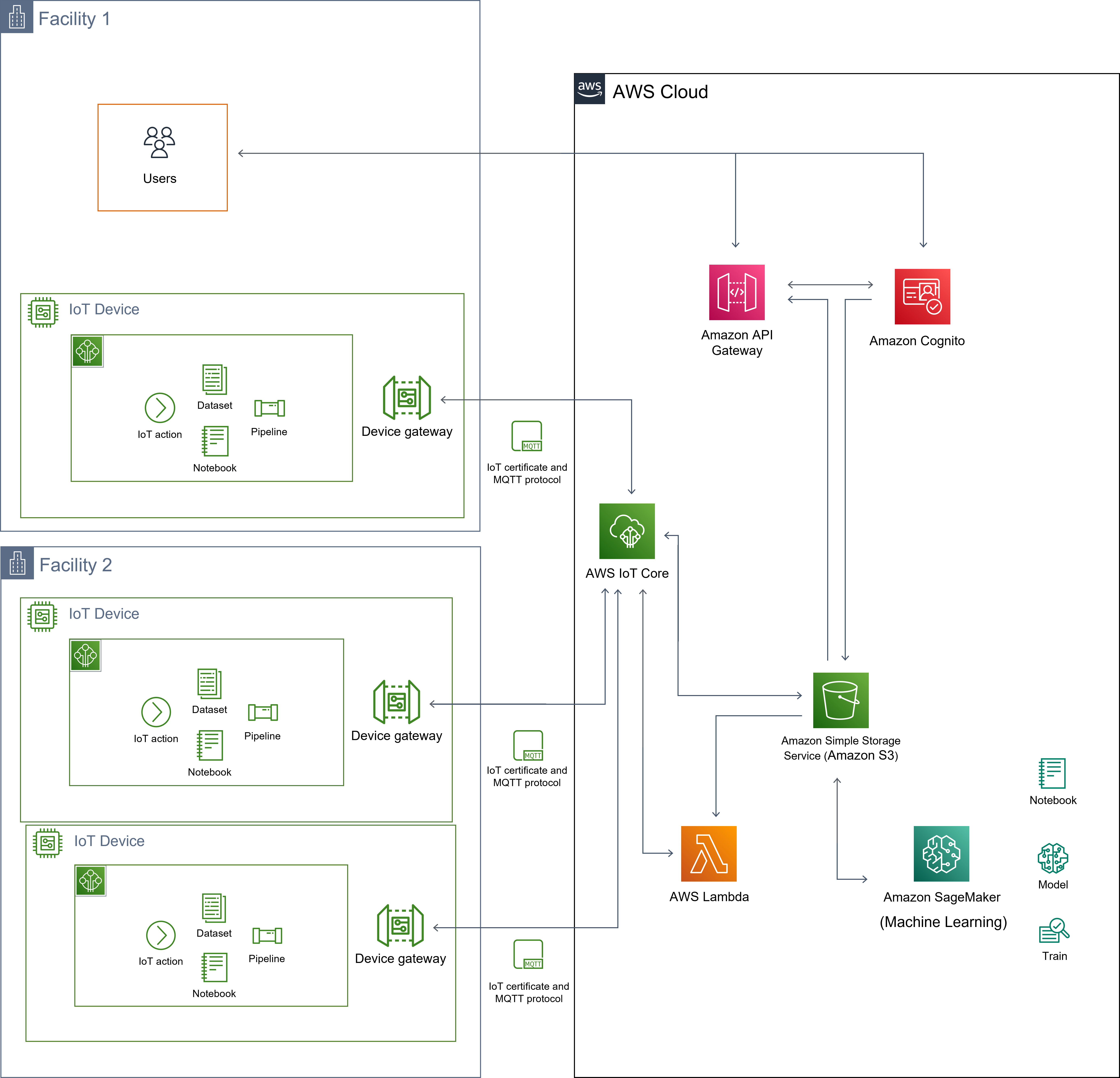

Cloud Architecture Design for IoT devices: microfluidic rheometers and machine learning

We want to design a cloud architecture that collects, processes, and responds to requests from IoT devices. The requests come from devices on the same network (for instance, in a hospital) and carry raw data to be processed by a pre-trained model. The main purpose of the cloud is to store all measurements in one place, so that they can easily be used to improve the machine learning model.

The goal is to build a system that is both robust and scalable: the infrastructure must recover from failures and serve multiple devices.
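
To make the intended request flow concrete, here is a minimal sketch of it in Python: a device sends raw data, the cloud stores the measurement, runs the model on it, and returns a response. Every name in it (`Measurement`, `CloudService`, `handle_request`, `mean_model`) is hypothetical and chosen for illustration only. The in-memory list stands in for real storage, and the mean function stands in for the pre-trained model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Measurement:
    device_id: str          # hypothetical identifier of the sending device
    raw_data: List[float]   # hypothetical raw signal from the rheometer


@dataclass
class CloudService:
    # Stand-in for the pre-trained model: any callable from raw data to a number.
    model: Callable[[List[float]], float]
    # Stand-in for persistent storage; a real deployment would use a database.
    store: List[Measurement] = field(default_factory=list)

    def handle_request(self, m: Measurement) -> Dict[str, object]:
        # Keep every measurement so it can later be used to improve the model.
        self.store.append(m)
        # Process the raw data with the model and answer the device.
        prediction = self.model(m.raw_data)
        return {"device_id": m.device_id, "prediction": prediction}


def mean_model(raw: List[float]) -> float:
    """Placeholder model (assumption): returns the mean of the raw samples."""
    return sum(raw) / len(raw)


service = CloudService(model=mean_model)
response = service.handle_request(Measurement("device-1", [1.0, 2.0, 3.0]))
print(response)
```

Storing the measurement before running the model means that the data is retained even if inference fails. This is one possible ordering rather than a requirement of the design.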

DISCLAIMER: the following architecture has been neither implemented nor deployed. It is intended as a proof of concept.